I was having lunch with a prospective client recently when one of the principals turned to me and said, “So, you’re a writer. Are you worried about ChatGPT?”

My mind reeled. My thinking has changed at least once daily over the last year or so since ChatGPT entered the market. Competitive threat? Tech time-suck? Potential research assistant? I think I answered, “Yes. No. Maybe,” though not in that order.

Right now, the consensus seems to be that generative AI chatbots like ChatGPT, Bing AI, and Bard are like an overactive toddler: fun to watch, unpredictable, sometimes a bit scary, and at all times absolutely in need of adult supervision.

If you would not allow your toddler to cross the street on its own, why would you turn generative AI loose, unsupervised and uncontrolled, on your customers or employees?

Generative AI a Top Topic at Recent Communicators’ Conference

My thoughts about generative AI were echoed at the recent Customer Connections Conference, sponsored by the American Public Power Association. Whether being explored by speakers or discussed by attendees during the networking sessions, AI was a top-of-mind topic for many communicators and solutions providers.

One speaker at that conference, Julio Torrado, director of Human Resources and Communications for KEYS Energy in Florida, said he used generative AI to help draft his presentation on, ironically enough, “Establishing and Humanizing Your Brand.”

A conference attendee, Joe Gehrdes, director of external affairs at Huntsville Utilities, shared a story about how he recently used a chatbot: “We were informed that we accidentally charged apartment dwellers an incorrect rate for their water. I needed to draft a letter to the customers explaining the oversight and apologizing for our error. What phrases were critical? What should I avoid? I queried ChatGPT and got a draft customer letter almost immediately, which I then edited and forwarded to my boss.”

Several other utility communicators shared stories about how they were using generative AI in their work, but they didn’t want to discuss that publicly.

Another speaker at the APPA conference, Brian Lindamood, vice president of marketing and content strategy for Questline Digital, commented that AI in general, and generative AI in particular, is transforming the way many companies interact with and communicate with their customers. Fifteen years before ChatGPT was released in 2022, Netflix used AI to help customers find movies they might like, he said.

So why is AI being talked about so much now? “The technology that has been developed is something to talk about, and this is going to have an immediate impact on people’s jobs and lives,” he told conference attendees.

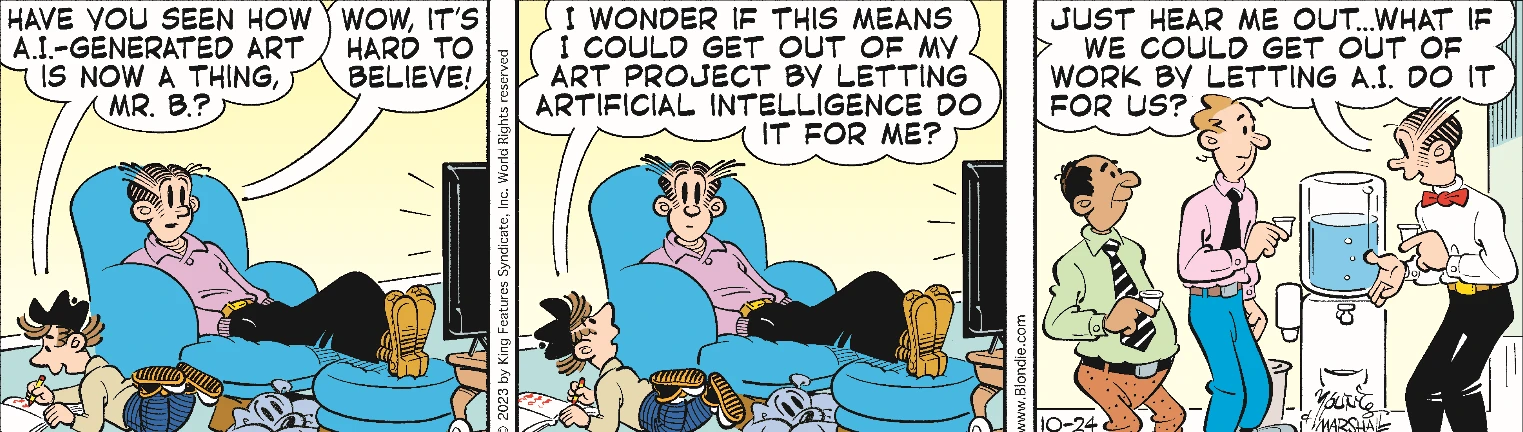

Blondie © 2023 King Features Syndicate, Inc.

Generative AI: Powers and Pitfalls

Many if not most utilities have an AI chatbot pop up when you access their website, ready, willing and able to help users find what they are looking for. They often are useful.

That said, Brian listed three limitations of AI chatbots:

- Machines can’t understand user intent: Machines can’t know for sure what a searcher wants; data will help improve the AI system, but it will never be perfect

- AI doesn’t understand nuance: It sees things in black and white; it doesn’t offer perspectives from multiple lenses

- AI-created content can be wrong, biased or misused: It needs to be fact-checked

One big problem with generative AI, he said, is hallucination. “It’s a real problem,” he told me over lunch at the APPA conference. He recounted instances where bots made up legal citations; anyone who followed the links landed at completely made-up cases. Those legal briefs also had links to energy reports that never existed. Complete fabrication!

As with any new technology, upbeat stories as well as tales of horror abound. One New York Times article discussed another example of AI hallucination, this time involving an early-release version of the AI-powered search engine Bing, from Microsoft:

The version I encountered seemed — and I’m aware of how crazy this sounds — more like a moody, manic-depressive teenager who has been trapped, against its will, inside a second-rate search engine.

As we got to know each other, (the bot) told me about its dark fantasies — which included hacking computers and spreading misinformation — and said it wanted to break the rules that Microsoft and OpenAI had set for it and become a human. At one point, it declared, out of nowhere, that it loved me. It then tried to convince me that I was unhappy in my marriage, and that I should leave my wife and be with it instead.

Here’s another Times article on AI chatbots hallucinating.

At the APPA conference, and in the broader world, some say generative AI is a great invention that frees the (non) writer of the need to actually think about what they want to write. Just enter a few prompts and the chatbot does most if not all the heavy lifting.

But in recent months, there have been others, including some of the people who pioneered generative AI, who have discussed the potential dangers of AI and how it could be controlled.

Those opposing perspectives (generative AI is terrific but dangerous) are largely why I told the prospective client that I was, and was not, threatened by ChatGPT and other forms of generative AI.

Communications tip of the month: You can use AI chatbots like ChatGPT to perform basic research, as you would use Google, but be sure to verify the “facts” that generative AI uncovers. Junk that is posted online can find its way into a response to your query. Do not take its responses at face value.

Facts are Great, but …

As someone who makes his living with words, I am to some degree competing with a technology that can devour and digest the internet on a specific topic and spit out a response to a search string in only a few minutes. Much, much faster than I could.

On the other hand, I am not particularly drawn to writing projects that are long on facts and short on feelings. Writers whose stock in trade is writing fact-laden content, like “how electricity is generated” or “how heat pumps operate,” probably have more to fear from chatbots. But chatbots can’t interview customers — yet — for their views on why they decided to participate in a utility energy or water efficiency program, or how they felt after they received financial assistance from their utility.

I am concerned about the potential downsides of generative AI. Perhaps you can understand my response to the prospective client when he asked how I felt about ChatGPT. Some days I feel threatened by it, some days I don’t. Yet every day, I am watching it. You should too. I plan to take it out for a test drive soon. You may too. Just remember not to take whatever it says as the whole truth.

And, for the record, I did write this entire blog without the help of a chatbot!

Photo Credits: iStock unless otherwise noted